The supported Tensor Core precisions were extended from FP16 to also include Int8, Int4, and Int1. The second generation of Tensor Cores came with the release of Turing GPUs. 1× Visualization of Pascal and Turing computation, comparing speeds of different precision formats - Source

#Nvidia fp64 how to#

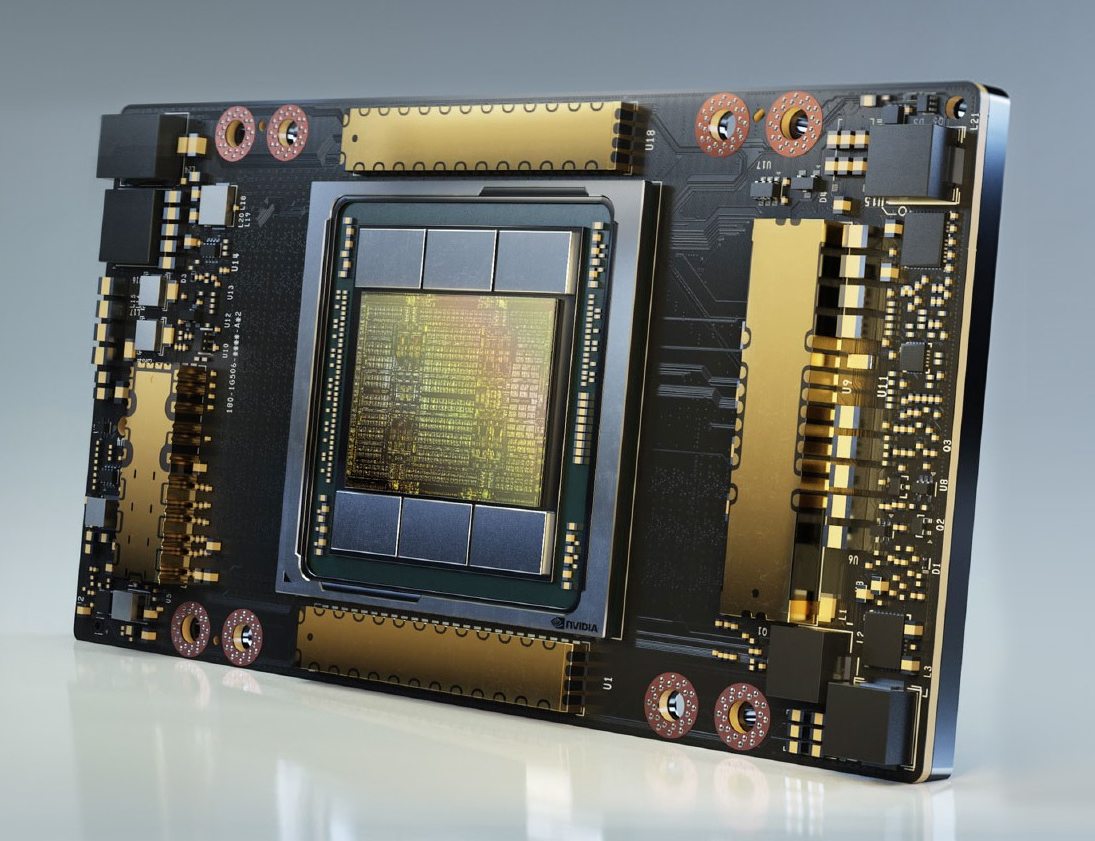

In the next section, we will discuss how each microarchitecture generation altered and improved the capability and functionality of Tensor Cores.įor more information about mixed information training, please check out our breakdown here to learn how to use mixed precision training with deep learning on Paperspace. (Source) With each subsequent generation, more computer number precision formats were enabled for computation with the new GPU microarchitectures. The first generation of Tensor Cores were introduced with the Volta microarchitecture, starting with the V100. Subsequent microarchitectures have expanded this capability to even less precise computer number formats! A table comparison of the different supported precisions for each generation of data center GPU - Source In effect, this rapidly accelerates the calculations with a minimal negative impact on the ultimate efficacy of the model. Mixed precision computation is so named because while the inputted matrices can be low-precision FP16, the finalized output will be FP32 with only a minimal loss of precision in the output. This allows two 4 x 4 FP16 matrices to be multiplied and added to a 4 x 4 FP16 or FP32 matrix. The first generation of these specialized cores do so through a fused multiply add computation.

Tensor Cores are specialized cores that enable mixed precision training.

What are Tensor Cores? A breakdown on Tensor Cores from Nvidia - Michael Houston, Nvidia To overcome this limitation, NVIDIA developed the Tensor Core. Because they can only operate on a single computation per clock cycle, GPUs limited to the performance of CUDA cores are also limited by the number of available CUDA cores and the clock speed of each core. Prior to the release of Tensor Cores, CUDA cores were the defining hardware for accelerating deep learning. Although less capable than a CPU core, when used together for deep learning, many CUDA cores can accelerate computation by executing processes in parallel. These have been present in every NVIDIA GPU released in the last decade as a defining feature of NVIDIA GPU microarchitectures.Įach CUDA core is able to execute calculations and each CUDA core can execute one operation per clock cycle. CUDA (Compute Unified Device Architecture) is NVIDIA's proprietary parallel processing platform and API for GPUs, while CUDA cores are the standard floating point unit in an NVIDIA graphics card. When discussing the architecture and utility of Tensor Cores, we first need to broach the topic of CUDA cores. What are CUDA cores? Processing flow on CUDA for a GeForce 8800. Readers should expect to finish this article with an understanding of what the different types of NVIDIA GPU cores do, how Tensor Cores work in practice to enable mixed precision training for deep learning, how to differentiate the performance capabilities of each microarchitecture's Tensor Cores, and the knowledge to identify Tensor Core-powered GPUs on Paperspace.

#Nvidia fp64 series#

In this blogpost we'll summarize the capabilities of Tensor Cores in the Volta, Turing, and Ampere series of GPUs from NVIDIA.

These specialized processing subunits, which have advanced with each generation since their introduction in Volta, accelerate GPU performance with the help of automatic mixed precision training. One of the key technologies in the latest generation of GPU microarchitecture releases from Nvidia is the Tensor Core.
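To make the fused multiply-add behind mixed precision concrete, here is a minimal pure-Python sketch of D = A x B + C on 4 x 4 matrices, with FP16 inputs and a selectable accumulator precision. It is an emulation for illustration only, not how Tensor Cores are actually programmed: the helper names (`to_fp16`, `to_fp32`, `fma_4x4`) are hypothetical, and rounding after every addition is an assumption rather than a description of the hardware's internal behavior.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def fma_4x4(a, b, c, accumulate=to_fp32):
    """Emulate D = A x B + C for 4 x 4 matrices (illustrative only).

    Entries of A and B are rounded to FP16 before multiplying. Every running
    sum is rounded with `accumulate`: to_fp32 imitates an FP32 accumulator,
    to_fp16 an FP16 one. Rounding after each addition is an assumption.
    """
    n = 4
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = accumulate(c[i][j])
            for k in range(n):
                product = to_fp16(a[i][k]) * to_fp16(b[k][j])
                acc = accumulate(acc + product)
            d[i][j] = acc
    return d


# Each output entry should be 2048 + (1*1 + 1*1 + 1*1 + 1*1) = 2052.
ones = [[1.0] * 4 for _ in range(4)]
c = [[2048.0] * 4 for _ in range(4)]

d_fp32 = fma_4x4(ones, ones, c, accumulate=to_fp32)
d_fp16 = fma_4x4(ones, ones, c, accumulate=to_fp16)

print(d_fp32[0][0])  # 2052.0
print(d_fp16[0][0])  # 2048.0, because 2049 is not representable in FP16
```

In this example the FP16 accumulator never moves off 2048, since FP16 values between 2048 and 4096 are spaced 2 apart and each intermediate sum of 2049 rounds back down. The FP32 accumulator keeps every added product, which is the reason for pairing cheap FP16 inputs with a higher-precision output.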
